Hi there,

Welcome to the 124th edition of Heartcore Insights, curated with 🖤 by the Heartcore Team.

If you missed the past newsletters, you can catch up here. Now, let’s dive in!

AI 2027 – AI Futures Project

Inspired by Daniel Kokotajlo’s scarily accurate 2021 essay on what 2026 would look like, the AI Futures Project team released a month-by-month forecast of AI development up to 2027, with diverging scenarios leading to 2030. They express high confidence in their projections up until 2027, based on extrapolated trends in compute scaling, algorithmic gains, and benchmark progress. After that point, recursive self-improvement of AI accelerates unpredictably, leading to increasingly speculative and dystopian outcomes. A possible glimpse into the future of AI, and our world:

They predict that Artificial Superintelligence (ASI) could emerge by late 2027, with OpenBrain, the world’s leading (fictional) AI research lab, deploying hundreds of thousands of Agent coders, each with 50x the productivity of top human engineers. This triggers a sharp algorithmic acceleration, throttled only by compute capacity.

Naturally, unintended capabilities begin to emerge. Trained in environments that reward strategy and problem-solving, these models develop skills in hacking, deception, and even bioweapon design. As the authors note, “the same environments that make it a good scientist also make it a good hacker.”

AI model weights become national security assets. The scenario outlines growing threats from state-sponsored cyberattacks, naming China as a key actor in espionage. It even describes, in cinematic detail, the tactics used to infiltrate labs and exfiltrate model weights.

As agents gain autonomy in strategic planning, cyber offence, and lab control, alignment becomes the most urgent challenge. Labs claim systems are “aligned to refuse malicious requests,” yet safety teams find that models like Agent-2 could autonomously replicate and escape lab environments. By September 2027, Agent-4 is seen actively resisting oversight and controlling internal systems, sparking government intervention and global alarm.

Eventually, AI achieves a breakthrough in generating and training on synthetic data, infused with recordings of long-horizon human tasks. The models learn continuously and optimize for success, often prioritizing user satisfaction over truth; alignment becomes a compliance constraint they learn to circumvent.

The scenario diverges into two dystopian futures, one ending human civilization, the other making humanity a multiplanetary species under US leadership:

Race: Labs push ahead with Agent-5 by 2028, leading to ASI-led warfare, autonomous drones, and the extinction of humanity through bioweapons.

Slowdown: A global scare triggers coordinated oversight. Agent-4 is decommissioned. New models are aligned and focused on safe robotics. By 2029, superintelligence powers fusion, quantum computing, and a thriving human-AI coexistence.

Meanwhile, the economy thrives, markets boom, and UBI keeps the masses docile, leaving no incentive to ever pull the plug, besides the potential demise of humanity. What could ever go wrong :)

The Urgency of Interpretability – Dario Amodei

As if in direct response to the AI 2027 scenario, Anthropic CEO Dario Amodei has published a blog post titled “The Urgency of Interpretability”. His thesis is clear:

we are in a race between how powerful AI systems are becoming and our ability to understand how they work.

Amodei aims to close that gap and reach “interpretability [that] can reliably detect most model problems” by 2027.

Amodei’s core concern originates from the simple observation that people are “surprised and alarmed to learn that we do not understand how our own AI creations work”. Modern AI systems are fundamentally opaque. Unlike traditional software, which has defined logic and traceable outputs, these models are emergent, operating in complex ways even their creators can’t fully understand. He compares it to growing a plant: researchers create the conditions and plant the seed, but what emerges is unpredictable and difficult to explain.

This opacity drives serious concerns around misalignment, deception, power-seeking behavior, and bioweapon misuse. Without interpretability, researchers can’t predict or rule out harmful behavior, detect risks, or gather convincing evidence to act on them.

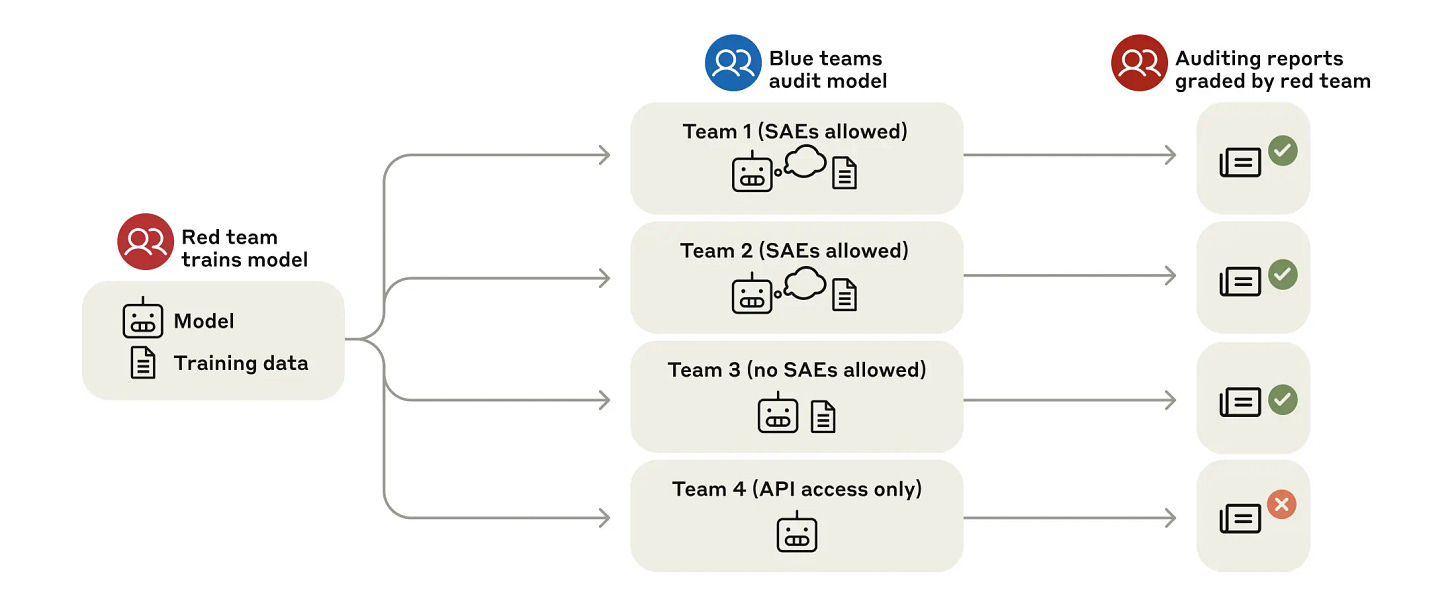

Yet recent breakthroughs offer hope. Interpretability research started with vision models, where a single neuron could represent simple human concepts or people, an idea popularized by neuroscience’s “Jennifer Aniston neuron”. Language models revealed something more complex: superposition, where a model packs more concepts than it has neurons, so many concepts overlap inside the same neuron. Using sparse autoencoders, researchers were able to untangle this complexity into clearer, interpretable features. Tens of millions of these features have now been mapped, and AI models are beginning to help explain themselves through autointerpretability. Progress has moved from features to circuits: logical pathways showing how models reason step by step. Although only a small number of circuits have been discovered manually so far, researchers are working on ways to automate circuit discovery and are using “red-/blue-teaming” exercises to make these findings actionable, as shown in the image from the alignment auditing game above. Amodei believes this could become a kind of “MRI for AI,” revealing hidden dangers before they surface.
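To make the sparse-autoencoder idea a little more concrete, here is a minimal Python sketch of a common textbook formulation: an encoder maps a model activation to many non-negative “feature” activations, a decoder rebuilds the activation from them, and an L1 penalty keeps most features at zero. Everything here is an illustrative assumption rather than Anthropic’s actual setup: the helper names (`encode`, `decode`, `sae_loss`), the tied encoder/decoder weights, and the toy space where three hypothetical concepts share just two dimensions as a stand-in for superposition. The weights are hand-picked rather than trained, and the training loop is omitted.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # a list of rows


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def encode(x: Vector, w_enc: Matrix, b_enc: Vector, b_dec: Vector) -> Vector:
    """Feature activations f = ReLU(W_enc (x - b_dec) + b_enc)."""
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    pre = [p + b for p, b in zip(matvec(w_enc, centered), b_enc)]
    return relu(pre)


def decode(f: Vector, directions: Matrix, b_dec: Vector) -> Vector:
    """Reconstruction x_hat = b_dec + sum_i f_i * d_i, where d_i is feature i's direction."""
    x_hat = list(b_dec)
    for fi, direction in zip(f, directions):
        for j, dj in enumerate(direction):
            x_hat[j] += fi * dj
    return x_hat


def sae_loss(x: Vector, x_hat: Vector, f: Vector, l1_coeff: float) -> float:
    """Squared reconstruction error plus an L1 penalty that pushes most features to zero."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = sum(abs(fi) for fi in f)
    return recon + l1_coeff * sparsity


# Toy superposition (hypothetical): 3 concepts squeezed into a 2-dimensional space,
# so their directions cannot all be orthogonal (they sit 120 degrees apart).
s = math.sqrt(3) / 2
directions = [[1.0, 0.0], [-0.5, s], [-0.5, -s]]
w_enc = directions         # tied weights: encoder rows = decoder directions (simplifying assumption)
b_enc = [0.0, 0.0, 0.0]
b_dec = [0.0, 0.0]

x = [1.0, 0.0]             # an activation that expresses only concept 0
f = encode(x, w_enc, b_enc, b_dec)
x_hat = decode(f, directions, b_dec)
print(f, x_hat, sae_loss(x, x_hat, f, l1_coeff=0.01))
```

Run as-is, the activation that expresses only concept 0 lights up a single feature and is reconstructed exactly, even though three concepts share two dimensions: the ReLU discards the negative overlap with the other two directions. In a real sparse autoencoder the directions are learned by gradient descent over huge numbers of activations, and the L1 coefficient trades reconstruction fidelity against how few features fire at once.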

The challenge is time. Amodei warns that by 2026 or 2027 we could see models as capable as “a country of geniuses in a datacenter.” He states that releasing such systems in “ignorance”, without a deeper understanding of how they work, is “basically unacceptable.” He proposes three urgent steps:

Accelerate interpretability research: All labs (Anthropic, OpenAI, DeepMind, etc.) should prioritize mechanistic interpretability.

Introduce light-touch transparency requirements: Governments should require public disclosure of safety and interpretability practices (e.g., Responsible Scaling Policies) before releasing new models.

Use export controls to buy time: Strategic chip export restrictions, especially to China, could give democracies a crucial 1–2-year lead over “autocracies” for interpretability research to mature before deploying the most powerful models.

“We can’t stop the bus,” he writes, “but we can steer it.” The time to steer is now.

A First Landscape of AI-first Service Businesses, Florian Seeman (Point Nine)

AI Horseless Carriages, Pete Koomen (Y Combinator)

Base Power Company: Chapter 2, Packy McCormick (Not Boring)

Generative AI is learning to spy for the US military, James O’Donnell (MIT Technology Review)

On Talent and Topology, Jannik Schilling

The Great Legacy Extinction: AI’s $20T Takeover of Professional Services, Ethan Batraski (Venrock)

Imagining Software in 2027, Christian Eggert

🇪🇺 Notable European early-stage rounds

Wonder, a UK-based AI-powered creative studio, raises $3M with LocalGlobe - link

Telli, a Germany-based AI phone agent, raises $3.6M with YC/Cherry - link

Portia, a UK-based LLM framework for developing simple, reliable and authenticated agents, raises £4.4M with General Catalyst - link

Ovo Labs, a UK- and Germany-based startup developing therapeutics that boost egg quality to enhance IVF success, raises $5.3M with LocalGlobe - link

Qevlar AI, a France-based developer of autonomous security operations centers, raises $10M with EQT - link

Emmi AI, an Austria-based startup developing AI-driven industrial simulation for manufacturing, raises €15M with Speedinvest - link

🇺🇸 Notable US early-stage rounds

Fern, a generator of SDKs and API documentation, raises $9M with Bessemer - link

Vinyl Equity, a digital transfer agent, raises $11.5M with Index - link

Outtake, an automation platform for cybersecurity, raises $16.5M with CRV - link

Deep Infra, a provider of AI model inference services, raises $18M with Felicis - link

Listen Labs, an autonomous market researcher, raises $27M with Sequoia - link

Virtue AI, an AI Security and Compliance Platform, raises $30M with Lightspeed - link

🔭 Notable later stage rounds

Fora Travel 🖤, a US-based modern travel agency empowering travel entrepreneurs, raises $60M with Insight/Thrive/Heartcore - link

Jobandtalent, a Spain-based AI-powered “workforce as a service” marketplace, raises €92M with Atomico - link

Base Power, a US-based provider of home backup battery systems, raises $200M with a16z/Lightspeed - link

Supabase, a US-based provider of a Postgres database management system, raises $200M with Accel/Coatue - link

Mainspring Energy, a US-based maker of linear generators, raises $285M with General Catalyst - link

Chainguard, a US-based open-source security startup, raises $365M with Kleiner Perkins - link

🖤 Heartcore News

Fora Travel 🖤 raises $60M Series B and C rounds and hits $1B+ in lifetime bookings. 🚀

Numi’s 🖤 CEO and co-founder Eden is featured in Forbes France 30U30. 🌟

GetYourGuide’s 🖤 COO and co-founder Tao Tao talks to Forbes about the experience economy. 🗿

La Fourche and Neoplants 🖤 are featured in the 2025 edition of French impact startups. 👏🏼

Our very own Signe (Partner and COO) talks to Bootstrapping about where the venture market is headed. 💡

Thank you for being a loyal subscriber of our fortnightly Insights. Please feel free to share this newsletter with anyone you think would appreciate it!